Exploring the Latest AI Innovations: DeepSeek, Qwen Series, and Multimodal Model Advancements

Hey there, tech enthusiasts! Today, I’m diving into the exciting world of AI innovations, particularly the latest developments in language, image, and audio models. These advancements are not just technical milestones; they’re reshaping how we interact with technology and opening up new possibilities for the future.

1. DeepSeek LLM and the Rise of Advanced Language Models

First up is DeepSeek LLM. This 67-billion-parameter language model has been a game-changer. It was trained on a massive 2 trillion tokens covering both English and Chinese. What’s fascinating about DeepSeek is its open-source approach, which makes the model accessible to research communities. Its ability to understand and generate complex text is impressive, and it’s a testament to how far we’ve come in natural language processing.

2. Qwen Series: Bridging Languages and Cultures

The Qwen series, especially the 72B and 1.8B models, is another standout. Developed by Alibaba Cloud, these models are not just about size; they bring a nuanced understanding of multiple languages, including Chinese and English. The 1.8B model is particularly interesting because it is small enough to deploy efficiently while still supporting long context lengths. Together, these models are a leap toward making AI accessible and useful across diverse linguistic landscapes.
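
To put "efficient deployment" in perspective, here's a back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The function and table names are my own, and the estimate deliberately ignores activations, the KV cache, and runtime overhead, so treat the numbers as a lower bound rather than a real requirement.

```python
# Rough weight-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores activations, KV cache, and runtime overhead,
# so actual memory use will be higher than these figures.

BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in [("1.8B", 1.8e9), ("72B", 72e9)]:
        for precision in BYTES_PER_PARAM:
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: ~{gb:.1f} GB")
```

At 16-bit precision that works out to roughly 3.6 GB of weights for a 1.8B model versus about 144 GB for a 72B model, which is why the smaller one is so much easier to run on modest hardware.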

3. Qwen Audio: Revolutionizing Audio to Text

Qwen Audio is a standout in audio-to-text. Unlike traditional transcription models, it goes beyond mere transcription to provide reasoned text outputs about the audio it hears. This capability is significant because it paves the way for more intuitive and context-aware audio processing.

4. XVERSE 65B and AquilaChat2-70B: The Multilingual Maestros

XVERSE 65B and AquilaChat2-70B represent the strides made in multilingual language processing. Their ability to handle extensive context lengths and support multiple languages marks a crucial step towards more inclusive AI technologies.

5. RWKV: A Revolutionary Approach in AI

RWKV, an RNN model, is notable for its efficiency. It competes with transformer models on quality while being lighter to train and having lower memory and time complexity: its cost grows linearly with sequence length, rather than quadratically as with standard attention. This model could be a game-changer in making AI more sustainable and accessible.
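
To get a feel for why a recurrent formulation can be cheaper, here's a toy sketch. To be clear, this is not RWKV's actual formulation, just a simplified decayed weighted average that I made up for illustration, with hypothetical function names. It computes the same outputs two ways: once by re-reading the whole history at every step (quadratic), and once by carrying a small running state forward (linear).

```python
import math


def decayed_average_quadratic(keys, values, decay):
    """Recompute the weighted average from the full history at every step (O(T^2))."""
    outputs = []
    for t in range(len(keys)):
        num = den = 0.0
        for i in range(t + 1):
            # Older items are down-weighted by decay^(distance from step t).
            weight = (decay ** (t - i)) * math.exp(keys[i])
            num += weight * values[i]
            den += weight
        outputs.append(num / den)
    return outputs


def decayed_average_recurrent(keys, values, decay):
    """Same result using a two-number running state (O(T) time, constant-size state)."""
    outputs = []
    num = den = 0.0
    for k, v in zip(keys, values):
        w = math.exp(k)
        # Decay the old state, then fold in the new item.
        num = decay * num + w * v
        den = decay * den + w
        outputs.append(num / den)
    return outputs


if __name__ == "__main__":
    keys = [0.1, -0.3, 0.7, 0.0, 0.2]
    values = [1.0, 2.0, -1.0, 0.5, 3.0]
    slow = decayed_average_quadratic(keys, values, decay=0.9)
    fast = decayed_average_recurrent(keys, values, decay=0.9)
    print(all(math.isclose(a, b) for a, b in zip(slow, fast)))
```

The recurrent version never looks back at earlier items, so the state it carries stays the same size no matter how long the sequence gets. That is the general intuition behind RNN-style efficiency.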

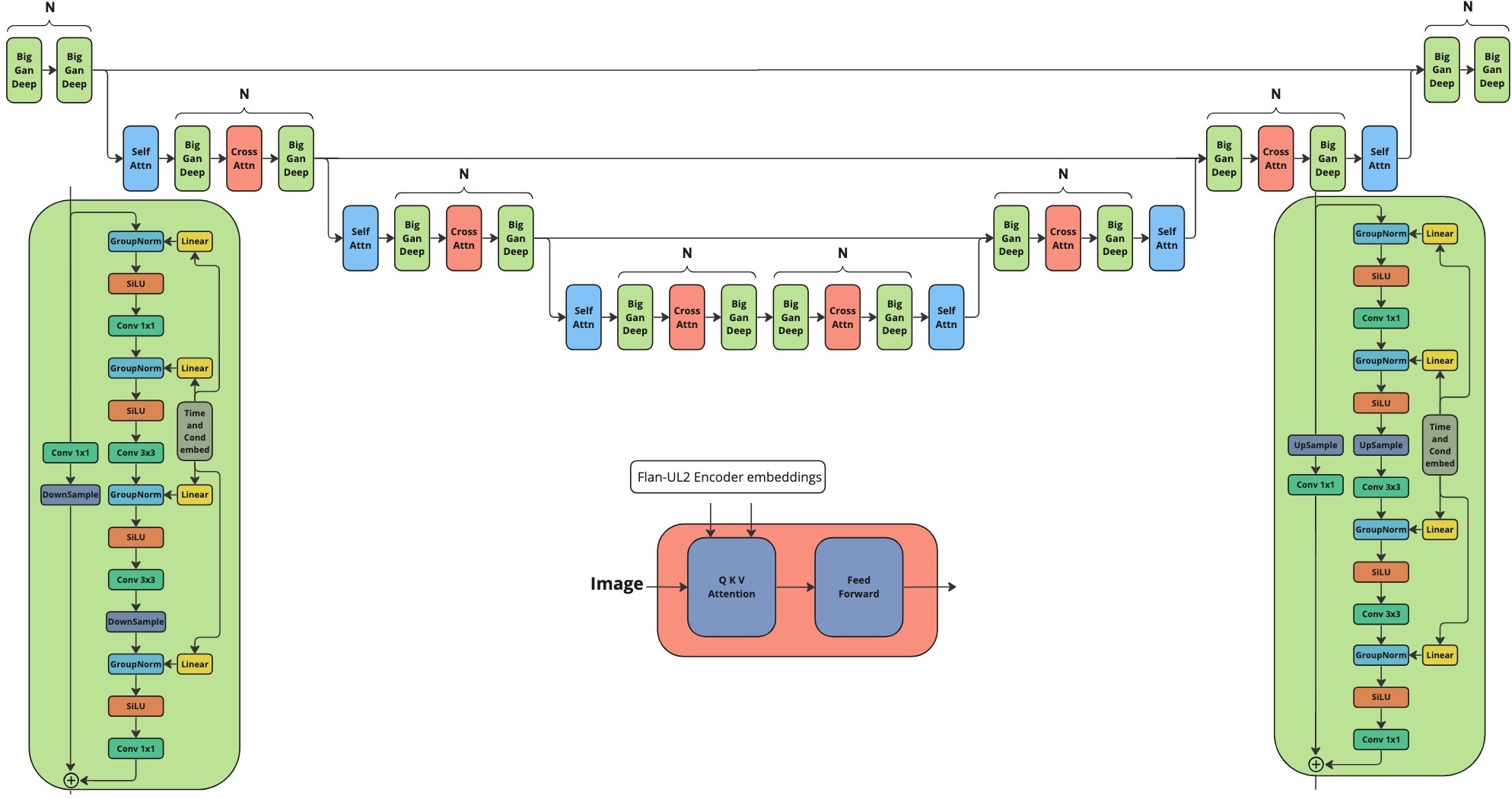

6. Stability AI’s Innovations in Image and Video

Stability AI has been making waves with its image and video models. Stable Video Diffusion and SDXL Turbo are particularly noteworthy. They’re not just fast; they’re pushing the boundaries of how we generate and upscale images and videos in real time.

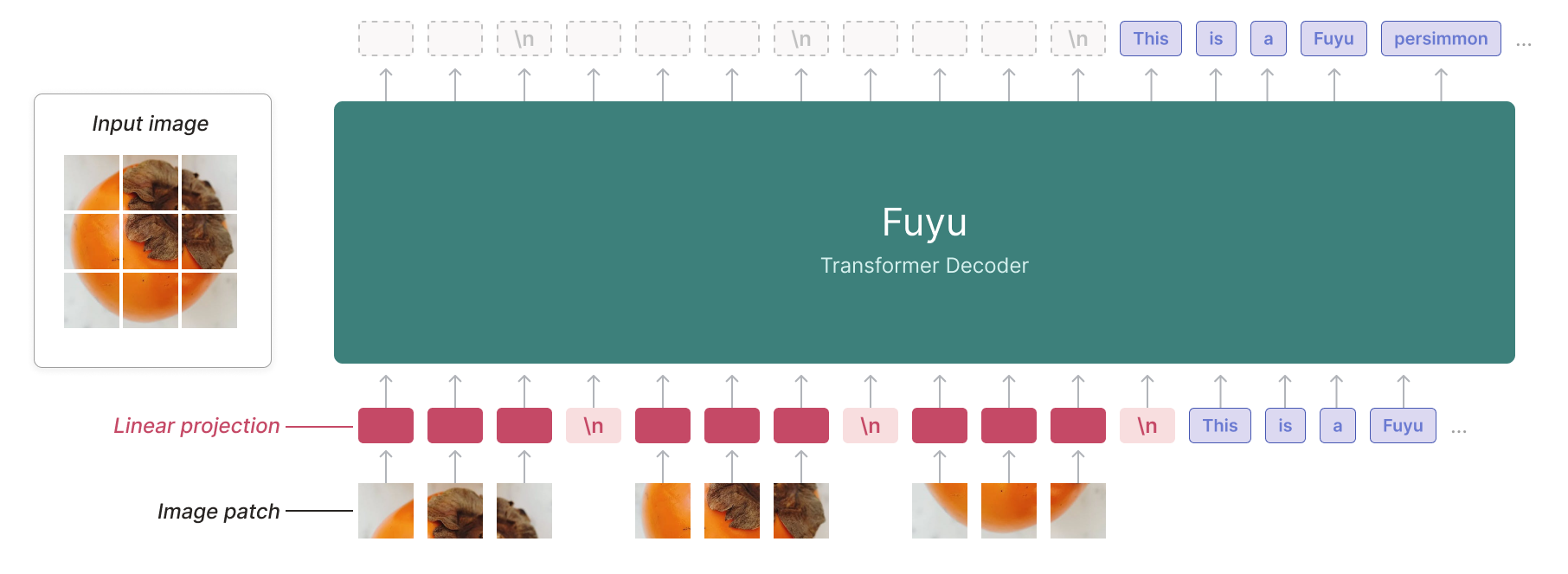

7. Kandinsky and Fuyu: Pioneers in Image to Text

Kandinsky, developed in Russia, continues to evolve through regular updates. Meanwhile, Fuyu stands out for its image-to-text capabilities, thanks to its unique architecture that tokenizes images by treating image patches as inputs to the language model.
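
If "tokenizing an image" sounds abstract, here's a minimal sketch of the general patch idea: cut the image into fixed-size squares and flatten each one into a vector, so the image becomes a sequence the model can read in order. This is only an illustration with a hypothetical helper name, not Fuyu's actual code, and a real model would still project each patch into its embedding space.

```python
def image_to_patches(image, patch_size):
    """Split a 2D grid (list of rows) into flattened patches in raster order."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            # Flatten one patch_size x patch_size square, row by row.
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


if __name__ == "__main__":
    # A 4x4 "image" whose pixel values are just 0..15.
    image = [[row * 4 + col for col in range(4)] for row in range(4)]
    for patch in image_to_patches(image, patch_size=2):
        print(patch)
```

Each flattened patch plays the role that a word token plays in text, which is what lets a single model handle both images and language.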

8. Whisper v3 and MusicGen: Audio Processing Excellence

Whisper v3 has set a new standard in transcription, while Meta’s MusicGen is redefining music generation. These models show the vast potential of AI in understanding and creating complex audio outputs.

9. Integrating AI into Everyday Tools

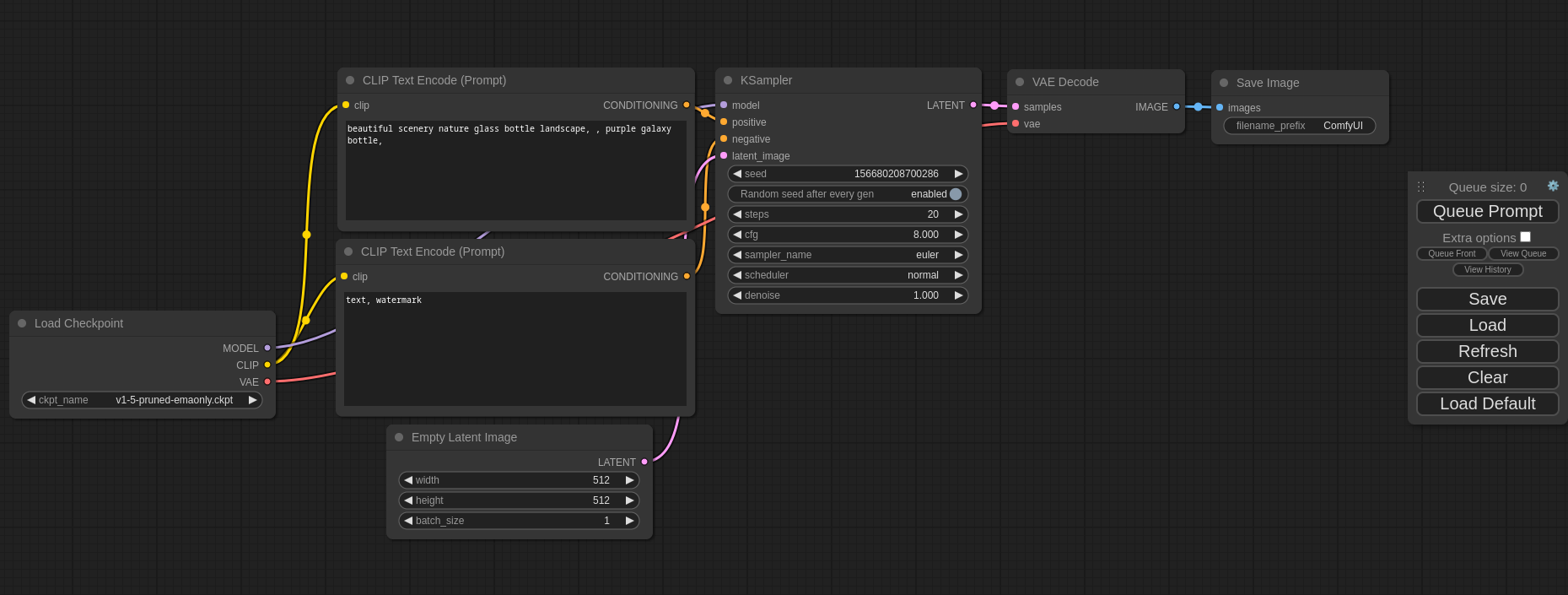

Projects like UniteAI and llama.cpp are integrating these AI models into our daily tools, making them more accessible and usable. ComfyUI is another notable project that brings image and video generation capabilities into a user-friendly web UI.

10. The Future of Multimodal Models

The development of multimodal models, where different AI models are combined to create more powerful and versatile systems, is particularly exciting. This approach could lead to breakthroughs in AI, offering more cohesive and integrated solutions.

FAQ

Q: What makes DeepSeek LLM so special? A: DeepSeek LLM’s vast training data and open-source approach make it a powerhouse in language understanding and generation, especially for English and Chinese texts.

Q: Why are the Qwen models significant? A: The Qwen models, especially Qwen 72B and 1.8B, are important for their multilingual capabilities and efficient deployment, making AI more accessible across different languages.

Q: How does Qwen Audio differ from traditional transcription models? A: Qwen Audio not only transcribes but also provides reasoned text outputs, making it more context-aware and intuitive in processing audio.

Q: What are RWKV’s advantages over transformer models? A: RWKV is an RNN model that competes with transformer models while being more efficient. It’s lighter to train and has lower memory and time complexity, making it more sustainable.

Q: Can you explain the significance of multimodal models in AI? A: Multimodal models combine different AI technologies to create more powerful and versatile systems, leading to more integrated and comprehensive AI solutions.

Keep an eye on these developments, folks! They’re not just shaping the future of AI but also how we interact with the world around us. Stay tuned for more updates!